Final Results of TWON

After three years of interdisciplinary research, the TWON (Twin of Online Social Networks) project concluded with a final event in Berlin, where the international consortium presented key findings on how online platforms shape democratic discourse and how mechanisms of discourse manipulation emerge in digital environments.

The closing event, hosted at Publix Berlin, brought together researchers and representatives from politics, academia, and civil society. Under the leadership of the FZI Research Center for Information Technology, the consortium reflected on the project’s results and discussed their implications for future research, platform governance, and regulation.

The event was opened by Dr Jonas Fegert, who emphasized the central role of online platforms and their underlying mechanisms in shaping public debate and democratic participation.

In her keynote, Dr Annette Zimmermann explored how platform mechanisms influence discourse dynamics on social media, including practices such as dog whistling and self-censorship. She highlighted how these dynamics affect public deliberation and outlined important avenues for future research.

Parsa Marvi, Member of the German Bundestag, underlined the relevance of TWON for understanding democratic discourse in the digital age. He stressed the importance of evidence-based research for effective and responsible platform regulation.

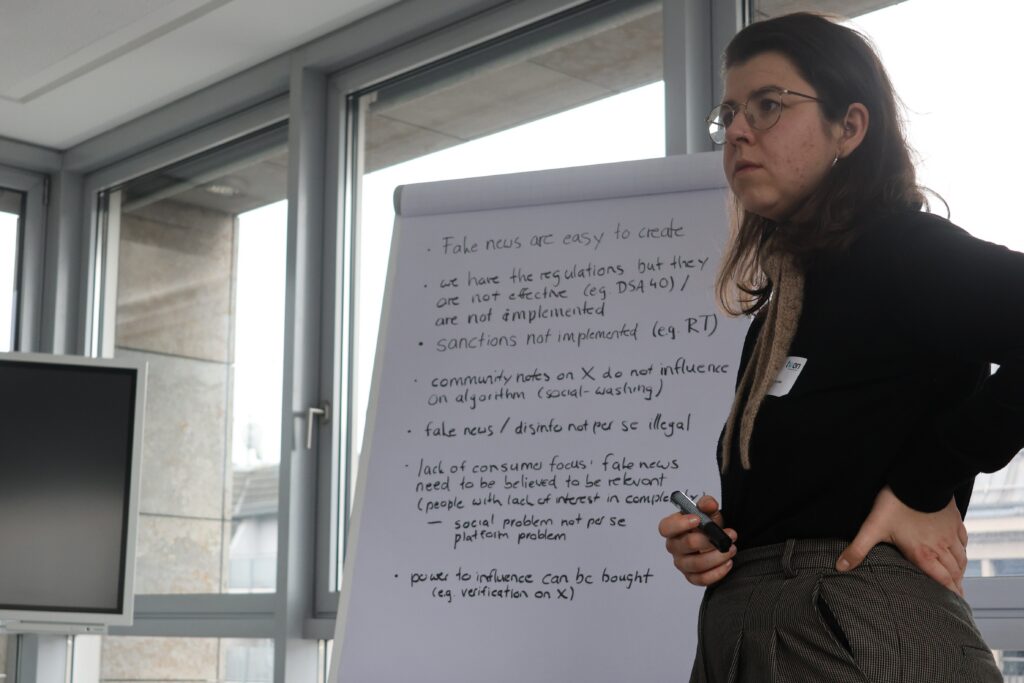

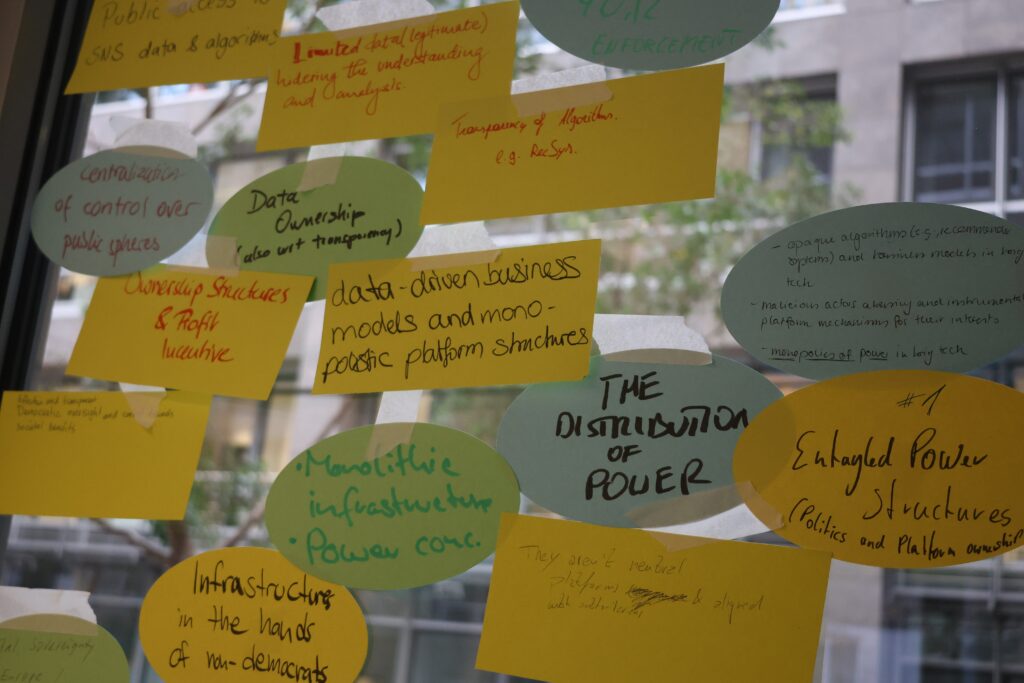

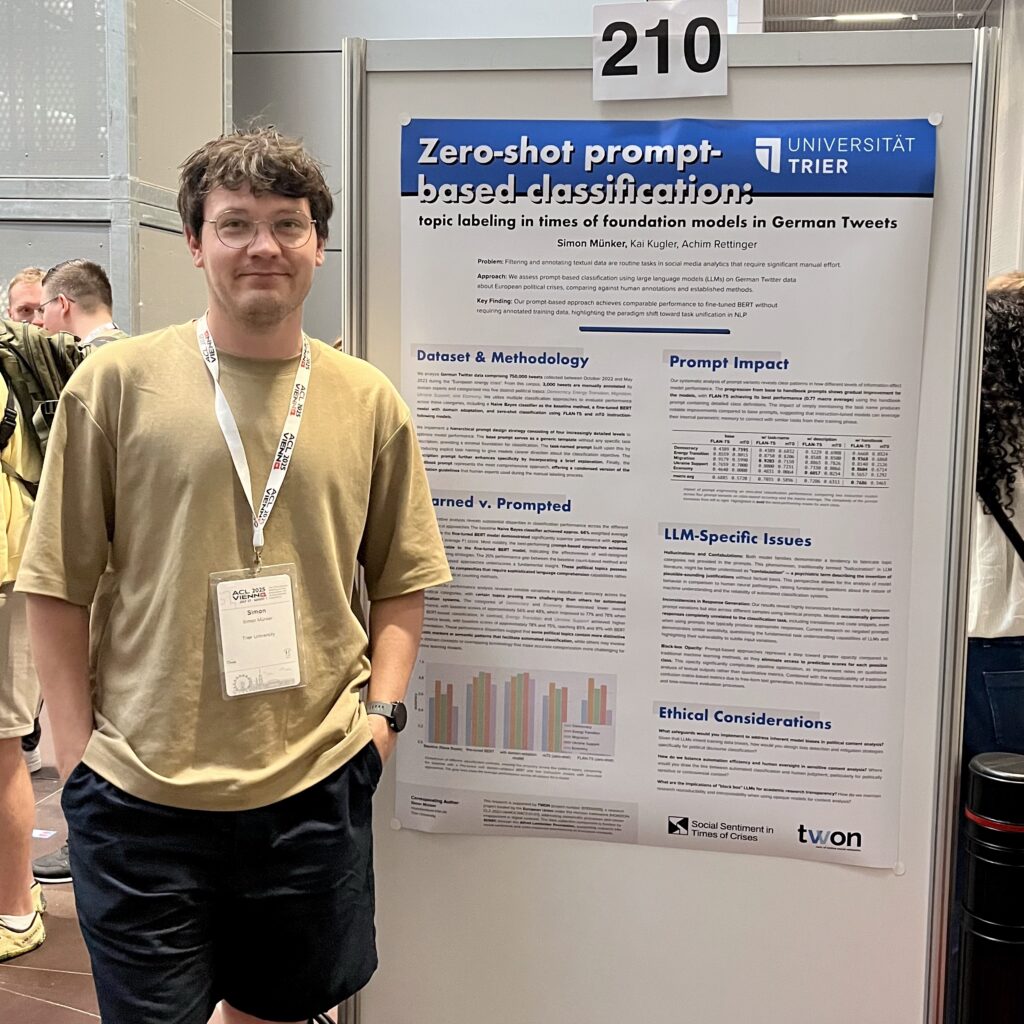

Key research results from the project were presented and discussed in a session moderated by Cosima Pfannschmidt, with contributions from Dr Alenka Guček, Prof Damian Trilling, Prof Achim Rettinger, and Prof Michael Maes, among others.

The event concluded with a panel discussion titled “What’s next”, featuring Annette Zimmermann, Parsa Marvi, Svea Windwehr, and Damian Trilling and moderated by Jonas Fegert. The discussion focused on concrete recommendations from research, policy, and implementation, particularly in relation to the Digital Services Act, and on how online social networks can be held accountable in times of increasing geopolitical tensions.

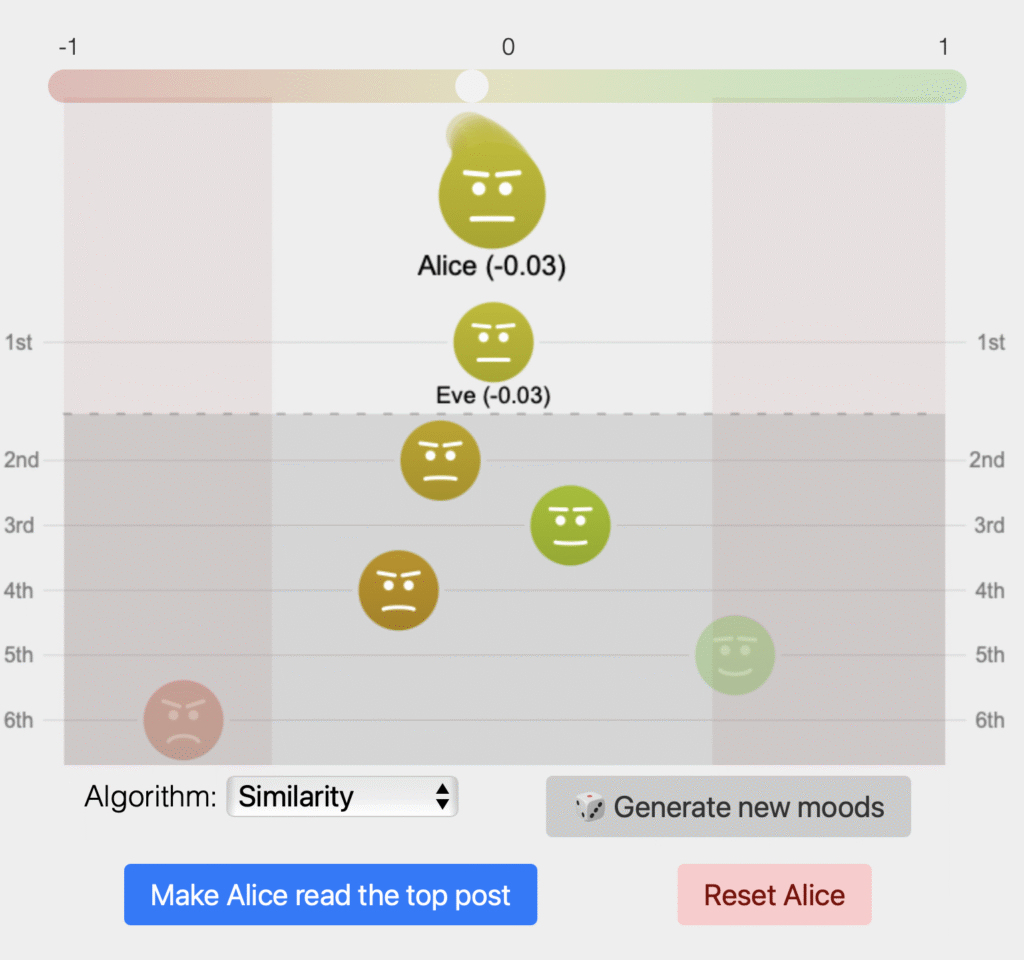

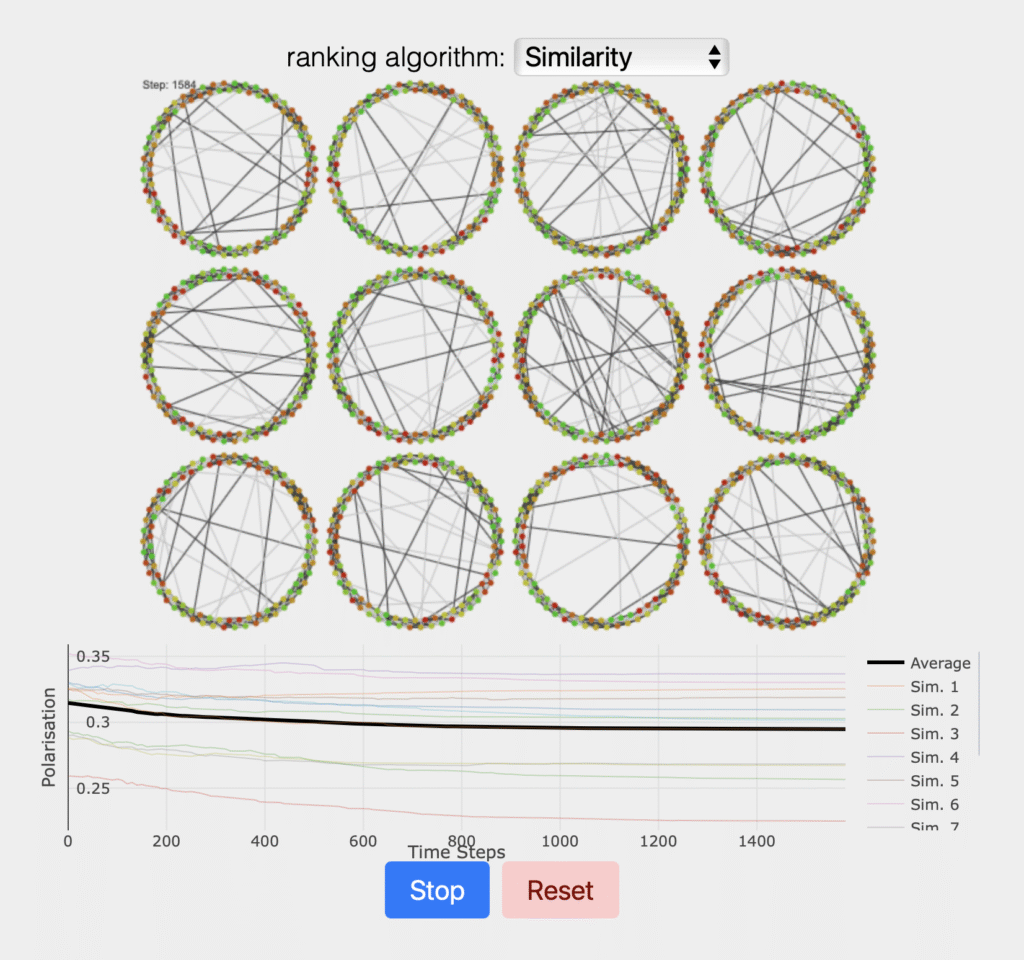

Throughout the evening, participants engaged with interactive project demonstrators, discussed research findings, and exchanged perspectives with TWON partners from across Europe.

TWON thanks all speakers, panelists, participants, and project partners for their valuable contributions and the close collaboration over the past three years.