From Research to Regulation: Rethinking Online Social Networks // January 28, Berlin

📆 Date: 28 January 2026, 6:00-9:30 pm

🎯 Location: Publix, Hermannstraße 90, 12051 Berlin

What does research tell us about the positive and negative effects of online social networks on societies? How can these platforms be designed to protect and strengthen democratic societies and foster a fair online public sphere? What research is needed, and how can academia work hand in hand with regulators, civil society, and practitioners to bring about change together? These questions are particularly urgent at a time when global geopolitical tensions, disinformation, and the rise of right-wing extremist forces in many democracies increasingly shape digital infrastructures.

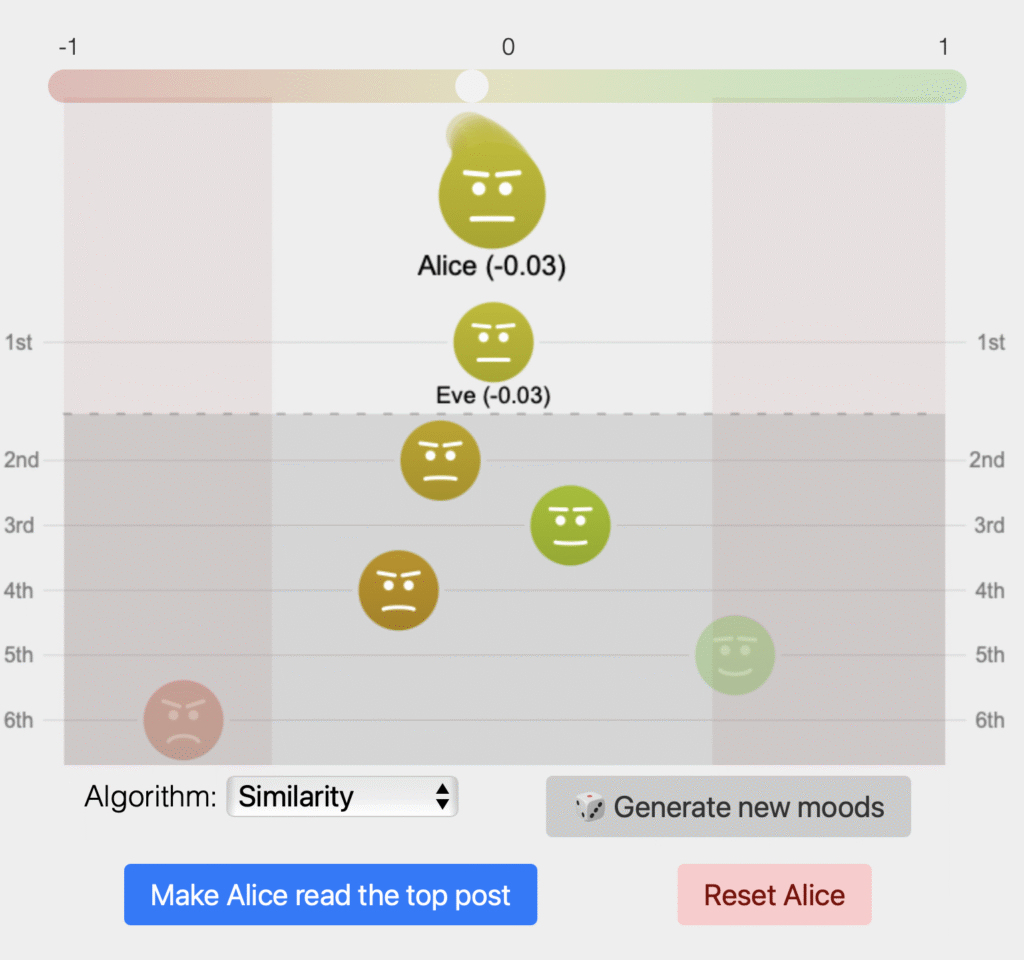

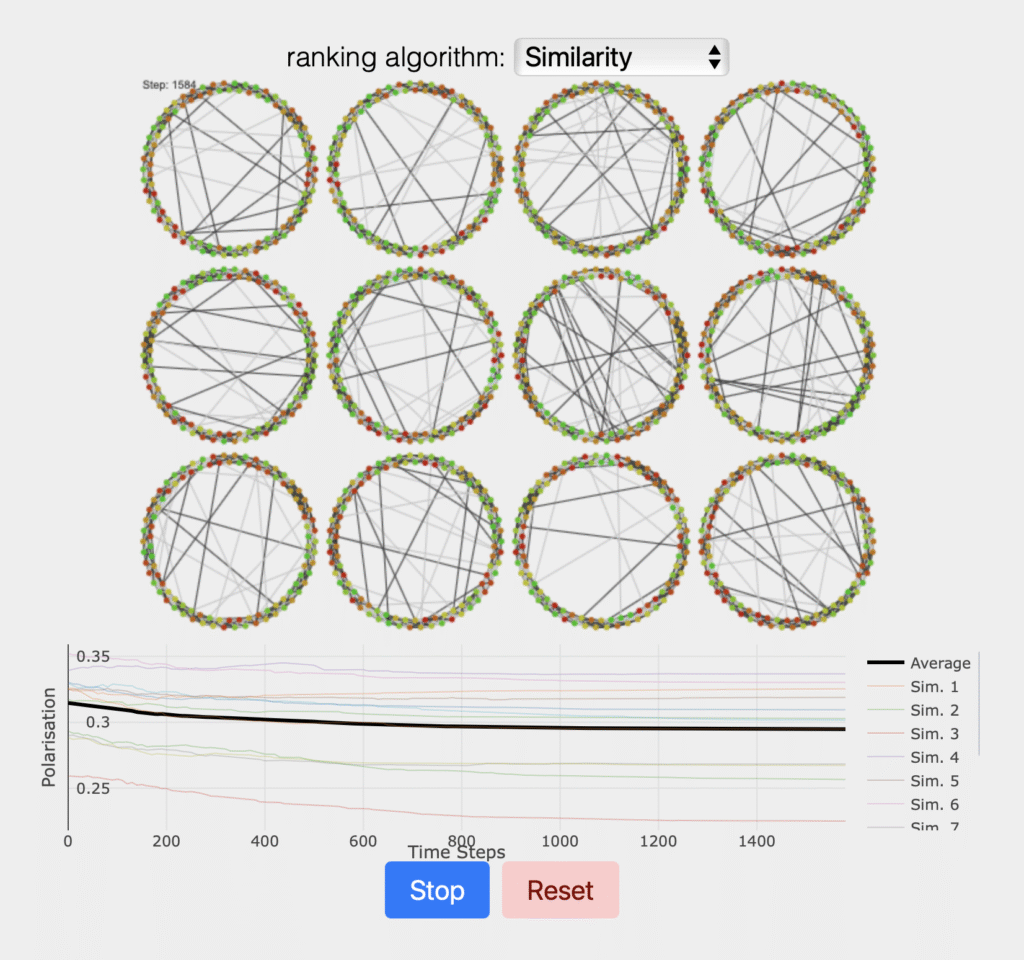

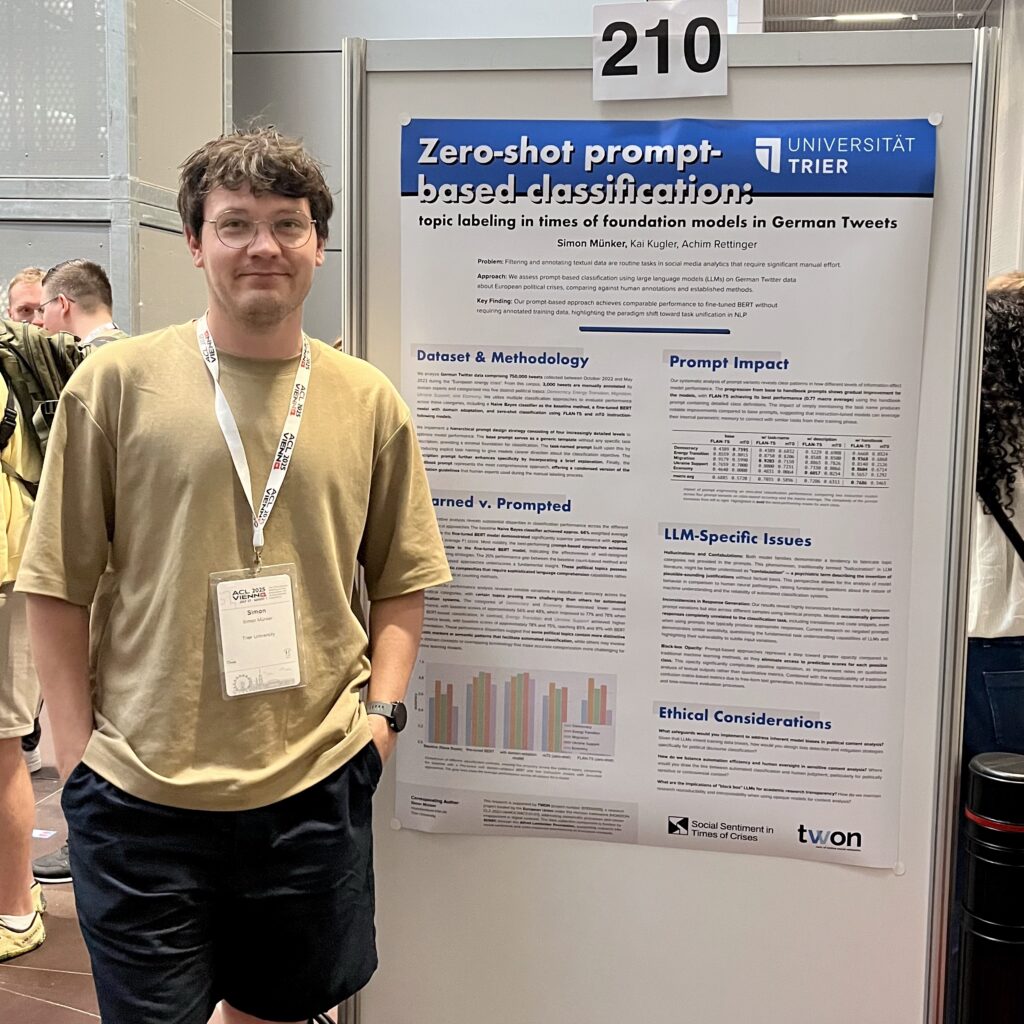

On this evening, we will present the research project “TWON – Twin of Online Social Networks” and discuss its results and implications with policymakers, journalists, and practitioners from civil society. TWON is an EU-funded research project that examines how the design of online platforms influences the quality of democratic online discourse. To this end, an interdisciplinary research team has developed a novel approach to studying online social networks: simulations run on a digital twin explore, for example, how different ranking algorithms affect the quality of debate, without experimenting on real users. The findings are translated into policy recommendations and discussed with citizens across Europe in participatory Citizen Labs. Consortium members include, among others, the Karlsruhe Institute of Technology (KIT), the University of Trier, FZI Forschungszentrum Informatik, the University of Amsterdam, the University of Belgrade, the Jožef Stefan Institute, and the Robert Koch Institute (RKI).

The event will also focus on how online social networks can be researched and shaped at the societal level. In particular, we will discuss promising avenues for future research and evidence-based policymaking, such as data access under the Digital Services Act (DSA), data donation frameworks, and current windows of opportunity in the European and global digital policy debate.

Before and after the stage program, guests are invited to explore interactive project demonstrators, engage with research results at poster stations, and connect informally with project partners from across Europe.

Proposed agenda:

17:30 – Arrival and Demonstrator & Poster Walk

18:00 – Opening: Jonas Fegert, TWON/FZI

18:15 – Impulse: Andrea Lindholz MdB, Vice President of the German Bundestag

18:30 – Keynote: Annette Zimmermann, University of Wisconsin-Madison

18:45 – Presentation of TWON project results

19:10 – Panel discussion

Annette Zimmermann, University of Wisconsin-Madison

Svea Windwehr, D64

Damian Trilling, TWON/University of Amsterdam

19:55 – Audience Q&A

20:10 – Buffet and Demonstrator & Poster Walk

We would be delighted to welcome you in Berlin and look forward to an open and productive discussion with you!